Hi, I'm Brandon Carone.

Human-centered music & audio AI researcher (final-year PhD candidate at NYU MARL), bridging music cognition and machine learning. Open to industry research roles starting May 2026.

About

My research integrates music cognition, machine learning, and audio-capable LLMs, with a focus on how we can build models whose perceptual abilities better reflect human music perception. By combining cognitive science, large-scale behavioral and streaming-log analyses, machine listening, and systematic benchmarking of audio models, I aim to understand how people discover, hear, and remember music, and to uncover when and why AI models' perceptual judgments of music align with or diverge from those of humans. My ultimate goal is to build music AI systems whose internal representations and error patterns are interpretable in psychological terms and better aligned with human music perception.

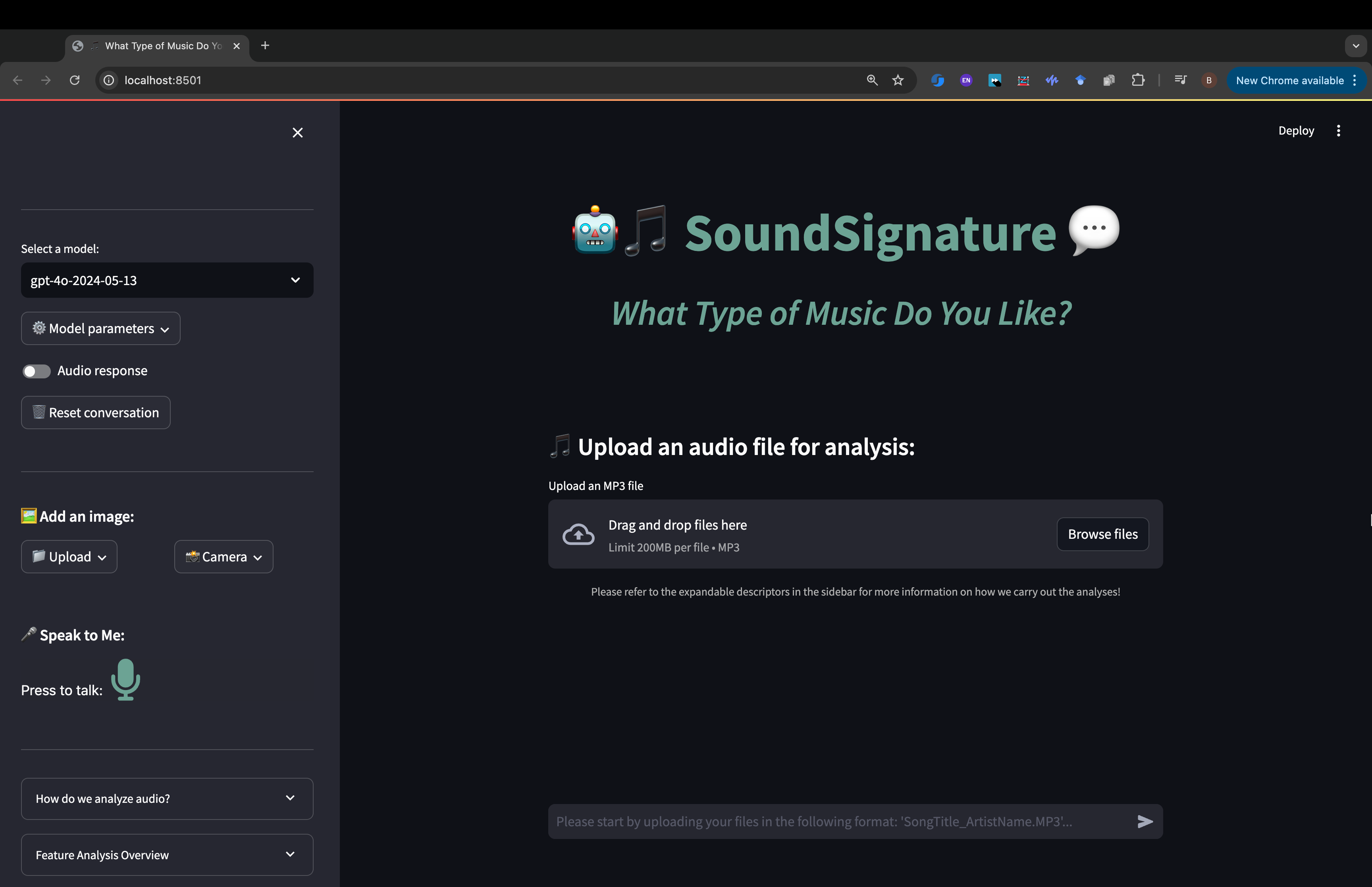

Recently, I led the development of the MUSE Benchmark, a suite of music tasks for probing music perception and abstract, relational reasoning in audio-capable LLMs. This work has been submitted to ICASSP 2026 and forms the basis for ongoing evaluations of state-of-the-art audio models. I also led a LogicLM-style prompting study using a subset of the MUSE Benchmark's tasks and stimuli. That study was presented as a poster at the NeurIPS 2025 Workshop AI for Music and as an oral presentation at the AAAI 1st International Workshop on Emerging AI Technologies for Music, and it will appear in the Proceedings of Machine Learning Research (PMLR). Previously, I developed SoundSignature, an interactive app that uses music information retrieval and a custom LLM to analyze listeners’ favorite tracks and provide personalized, educational feedback about their musical preferences.

Beyond academic work, I have industry-facing experience as a Research Scientist Intern at Deezer, where I investigate cognitively informed models of music discovery using large-scale streaming data, and as a consultant for EEG-based generative music applications. These projects reflect my broader goal: to bridge human-centered music cognition with modern AI systems in ways that are both scientifically grounded and practically impactful for music technology.

- Programming: Advanced: Python, MATLAB, R | Intermediate: C++, JavaScript, HTML

- Research: Machine Learning, Computational Modeling, GLMMs, Inferential Statistics

- Audio and Music: Librosa, Essentia, MIRToolbox, madmom, mirdata, mir_eval, music21, mido

- Frameworks: PyTorch, TensorFlow, Keras, Streamlit, Node.js

- Tools & Technologies: Git, Logic Pro X, PsychoPy, JIRA

My current focus is on building benchmarks, models, and applications that close the gap between how humans and AI systems perceive music—making music technologies that are not only powerful, but also cognitively plausible and genuinely useful to listeners, creators, and researchers.

Education

New York University

New York, NY

Degree: Doctor of Philosophy (PhD) in Cognition and Perception

Expected Graduation: May 2026

- Advisor: Professor Pablo Ripollés, Music and Audio Research Lab (MARL)

- Fellowship: Dean’s Doctoral Fellowship

- Relevant Coursework: Deep Learning, Computational Cognitive Modeling, Music Information Retrieval, Auditory Perception, Time Series Analysis

University of California, Los Angeles

Los Angeles, CA

Degree: Bachelor of Science (BS) in Cognitive Science

Graduation: June 2019

- Specialization: Computing

- Honors: Graduated with Honors

- Thesis: Clinically studied or clinically proven? Memory for claims in print advertisements

Projects

A benchmark for probing music perception and abstract, relational reasoning in humans and LLMs.

- Ran large-scale evaluations with human participants and with audio LLMs such as Gemini, Qwen, and Audio Flamingo, testing the models under zero-shot, few-shot, and chain-of-thought prompting paradigms.

- Developed analysis scripts and a GLMM-based statistical workflow to compare human and model performance across tasks and prompting strategies.

- Tools: Python, R (lme4), HPC, audio-LLM APIs, GitHub

Evaluating logic-style prompting strategies to improve structured reasoning in audio-capable LLMs.

- Adapted LogicLM-style prompting to music perception tasks, comparing standard, chain-of-thought, and constrained reasoning prompts on the MUSE Benchmark.

- Analyzed how different prompting schemes affect error types (e.g., rhythmic vs. harmonic mistakes) and alignment with human judgments.

- Built logging and evaluation pipelines to track per-task accuracy, confidence, and reasoning patterns across multiple models and seeds.

- Tools: Python, JSON log parsing, Matplotlib, audio-LLM APIs, GitHub

Developed an app that integrates MIR with AI to analyze users' favorite songs.

- Built SoundSignature, an interactive application that pairs MIR analysis with a custom LLM to give users personalized, educational insights into their musical preferences.

- Tools: Python, Machine Learning, MIR, Natural Language Processing

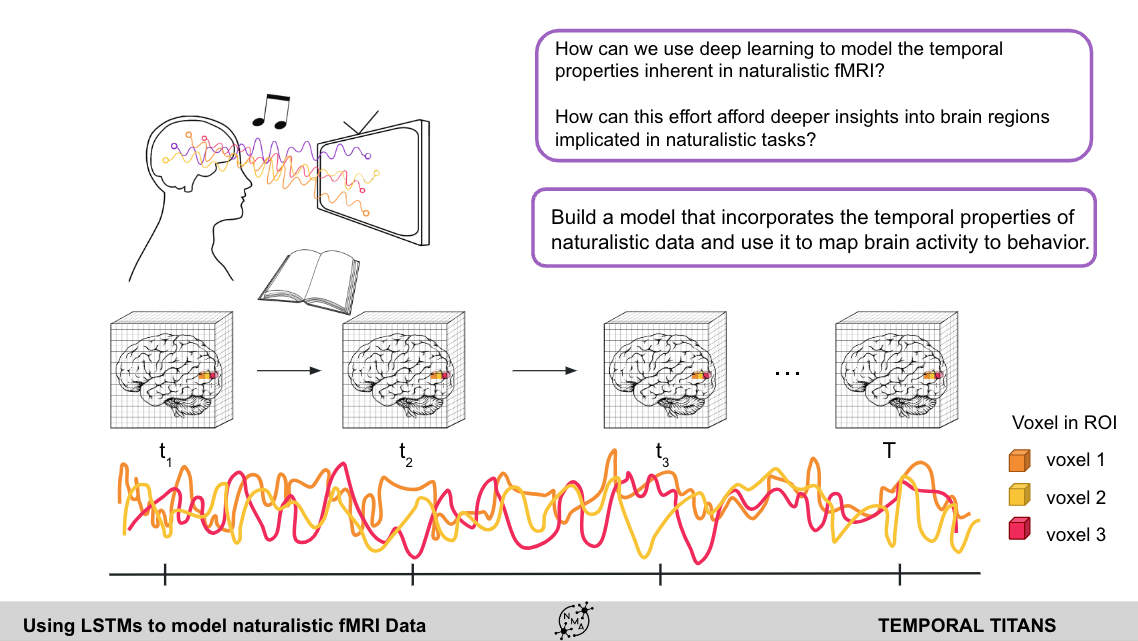

Developed and optimized LSTM networks to analyze fMRI data.

- Developed as a Neuromatch Academy project exploring fMRI data to characterize brain activity patterns, such as by classifying social vs. nonsocial interactions.

- Tools: Python, PyTorch, Keras, Machine Learning, fMRI Analysis

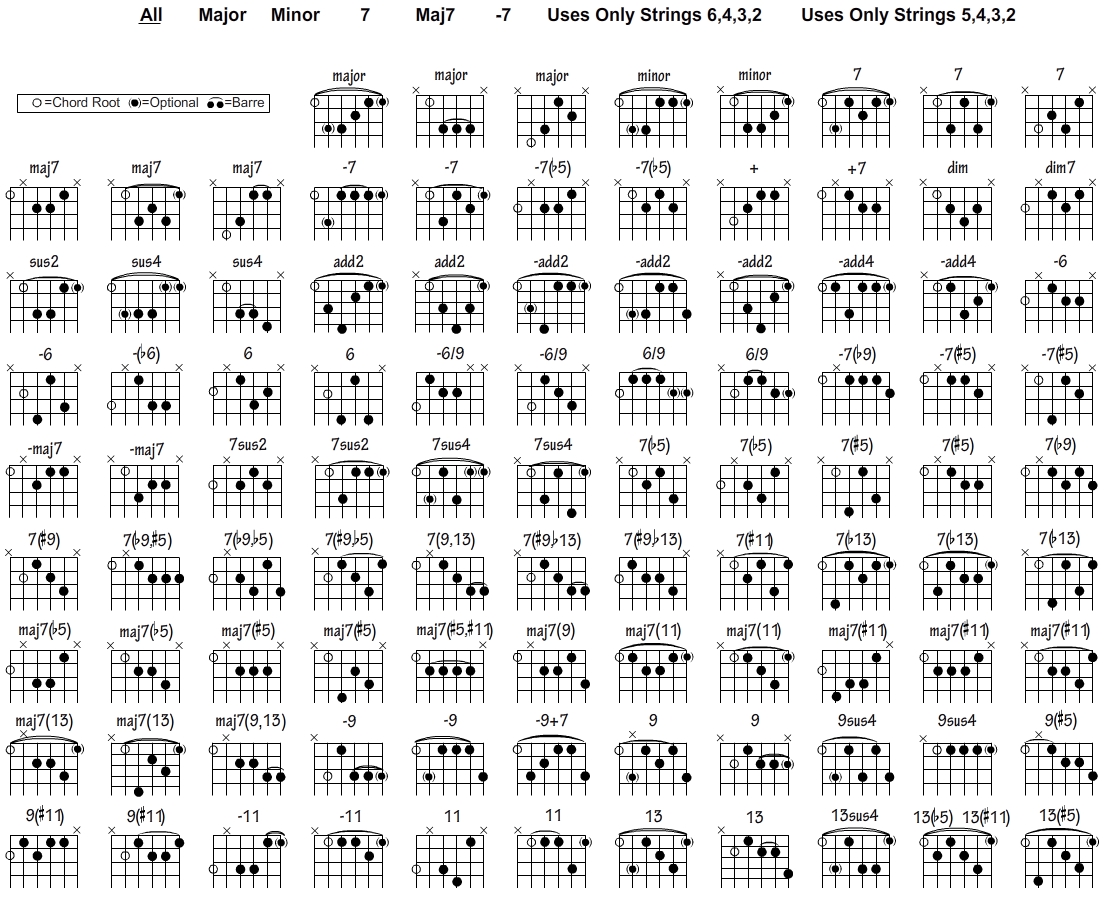

Modified the CREMA model to explore how well human perception aligns with the model's deep features.

- Compared human perception of chord similarity with the CREMA model's deep feature representations.

- Collected and analyzed human similarity judgments on ii-V-I chord variations.

- Tools: Python, Machine Learning, TensorFlow, Keras, Audio Analysis, Chord Recognition

Experience

- Built the MUSE Benchmark, an experimental framework that assesses music perception and auditory reasoning in multimodal LLMs with 10 listening tasks spanning melody, rhythm, harmony, timbre, and more.

- Evaluated four state-of-the-art audio LLMs and compared their results with a large human sample (N = 200), finding a persistent human–machine gap in abstract musical reasoning across tasks, especially in harmony and meter.

- Exploring the neural mechanisms supporting music memory (i.e., what makes a song memorable?) using both behavioral and fMRI data. Using ratings of pleasure and a computational model of music novelty, we show that songs are better remembered when they are highly pleasurable to listen to and novel at the same time.

- Investigated the cognitive mechanisms underlying music discovery by integrating large-scale streaming data analyses with frameworks from cognitive science.

- Developed and formalized models linking familiarity, novelty, and cognitive effort to listener engagement and memory, framing discovery as a gradual process rather than an instantaneous event.

- Implemented predictive and neural models that incorporate user embeddings, familiarity decay, and session-level cognitive load to estimate the likelihood of a song being liked.

- Co-authored a paper (in preparation) proposing a cognitively informed framework for music recommender systems that operationalizes mental load, familiarity decay, and the dynamics of repeated exposure.

- Guiding improvements in software that reads signals from a consumer EEG headset and feeds them to an adaptive ML algorithm to create meditative music from real-time brain activity.

- Advising on research strategy for clinical studies exploring the psychological benefits of adaptive music generation.

- Conducted research using neuroimaging (MRI, fMRI, DTI, ASL), audiology, eye-tracking, and neuropsychological assessments to evaluate cognition, perception, and brain health.

- Ran structural and multiband functional MRI scans with children aged 8–12 to study reward sensitivity and obesity risk.

- Processed neuroimaging data using HCP Pipelines, FreeSurfer, FSL, and AFNI.

- Conducted medical assessments and patient interviews for protocol management.

- Led a team of five in supporting the Founder/Director with operations, fundraising, research, and legal tasks for a nonprofit creating musical support groups for patients with neurodegeneration.

- Implemented the Public Education and Awareness Platform and launched the “Meet the Expert” Podcast, now with 19 episodes.

- Designed the website and managed Google Ads campaigns, securing a $10,000/month in-kind ads grant.

- Completed an honors thesis examining false memories for print advertisements with a sample of 500+ participants from the local community and Amazon Mechanical Turk.

- Received a grant to conduct an independent research study investigating the effects of listening to different musical genres on memory formation.

- Coded the experiment and analyzed data using JASP.

Publications

Peer-Reviewed Articles & Proceedings

- Carone, B. J., Roman, I. R., & Ripollés, P. (2026). LLMs can read music, but struggle to hear it: An evaluation of core music perception tasks (In press). To be published in the Proceedings of Machine Learning Research. https://openreview.net/forum?id=hKE8tQzueC

- Groves, K., Farbood, M. M., Carone, B. J., Ripollés, P., & Zuanazzi, A. (2025). Acoustic features of instrumental movie soundtracks elicit distinct and mostly non-overlapping extra-musical meanings in the mind of the listener. Scientific Reports, 15, 2327. https://doi.org/10.1038/s41598-025-86089-6

- Carone, B. J., & Ripollés, P. (2024). SoundSignature: What Type of Music Do You Like? Proceedings of the IEEE 5th International Symposium on the Internet of Sounds (IS2).

- Murphy, D. H., Schwartz, S. T., Alberts, K. O., Siegel, A. L. M., Carone, B. J., Castel, A. D., & Drolet, A. (2023). Clinically studied or clinically proven? Memory for claims in print advertisements. Applied Cognitive Psychology, 37(5), 1085–1093.

Workshop Papers

- Carone, B. J., Roman, I. R., & Ripollés, P. (2025). Evaluating Multimodal Large Language Models on Core Music Perception Tasks. NeurIPS 2025 Workshop AI for Music: Where Creativity Meets Computation. https://arxiv.org/abs/2510.22455

Under Review

- Carone, B. J., & Ripollés, P. (2025). The Effects of Novelty and Abstract Reward on Memory Performance.

- Carone, B. J., Roman, I. R., & Ripollés, P. (2025). The MUSE Benchmark: Probing Music Perception and Auditory Relational Reasoning in Audio LLMs. https://arxiv.org/abs/2510.19055

In Preparation

- Carone, B. J., Sguerra, B., Escobedo, G., Tamm, Y. M., & Bonnin, G. (2025). Discovery-Oriented Music Recommendation: The Role of Cognitive Effort and Familiarity.

- Carone, B. J., Abrams, E. B., & Ripollés, P. (2025). The Neural Mechanisms of Novelty and Abstract Reward in Memory Performance.

- Rodríguez-Vázquez, R., Carone, B. J., Groves, K., Namballa, R., Zuanazzi, A. R., & Ripollés, P. (2025). Identification of Basic Emotions Through Language Rhythms.

Talks & Media

Conference Presentations

- Carone, B. J., Roman, I. R., & Ripollés, P. (2026). LLMs can read music, but struggle to hear it: An evaluation of core music perception tasks. Oral presentation at the AAAI 1st International Workshop on Emerging AI Technologies for Music. https://openreview.net/forum?id=hKE8tQzueC

- Carone, B. J., Roman, I. R., & Ripollés, P. (2025). Evaluating Multimodal Large Language Models on Core Music Perception Tasks. Poster presented at the NeurIPS 2025 Workshop AI for Music: Where Creativity Meets Computation.

- Carone, B. J., & Ripollés, P. (2024, October). SoundSignature: What Type of Music Do You Like? Oral presentation at the 5th annual IEEE International Symposium on the Internet of Sounds (IS2 2024), International Audio Laboratories, Erlangen, Germany.

- Carone, B. J., Abrams, E. B., & Ripollés, P. (2023, November). The Effects of Novelty and Abstract Reward on Memory Performance. Poster presented at the Society for Neuroscience, Washington, D.C.

- Carone, B. J., Merritt, V. C., Jurick, S. M., & Jak, A. J. (2021, February). Effects of Major Depressive Disorder on Veterans. Poster presented at the International Neuropsychological Society, San Diego, CA.

- Carone, B. J., Siegel, A. L. M., Castel, A. D., & Drolet, A. (2019, May). False memory for print advertisements. Poster presented at multiple conferences.

Invited Talks

- Carone, B. J. (2025, October). The MUSE Benchmark: Probing Music Perception and Auditory Relational Reasoning in Audio LLMs. Invited speaker at Marcus Pearce’s lab meeting, Queen Mary University of London.

- Carone, B. J. (2025, October). The MUSE Benchmark: Probing Music Perception and Auditory Relational Reasoning in Audio LLMs. Guest Lecturer for the Artificial Intelligence course at Queen Mary University of London.

- Carone, B. J. (2025, October). The MUSE Benchmark: Probing Music Perception and Auditory Relational Reasoning in Audio LLMs. Invited speaker for the Machine Listening Group at Queen Mary University of London.

- Carone, B. J. (2025, September). Modeling Successful Music Discovery. Guest Lecturer at Deezer in Paris, France.

- Carone, B. J. (2025, August). Do You Hear What I Hear? Music Perception in Minds and Machines. Guest Lecturer at Deezer in Paris, France.

- Carone, B. J. (2024, October). Music and AI: Theoretical and Practical Perspectives. Guest Lecturer at Queen Mary University of London.

- Carone, B. J. (2024, July). SoundSignature: What Type of Music Do You Like? Guest Lecturer at the Deep Learning for Music Information Retrieval II: State-of-the-Art Algorithms Workshop at the Center for Computer Research in Music and Acoustics at Stanford University.

Media

- Carone, B. J., Zatorre, R. J. (Interviewees) & Zhu, X. (Host). (2022, October 6). Cognitive Neuroscience of Music and Memory [Audio podcast]. Research Journey Initiative.

- Carone, B. J. (Interviewee) & Bowes, P. (Host). (2019, April 4). Why music helps us age better [Audio podcast]. Live Long and Master Aging Podcast.

- Carone, B. J., Rosenstein, C. P. (Interviewees) & Sharp, R. (Producer). (2018, October 10). Music Mends Minds [Radio show]. BBC Radio 5 Live’s Up All Night with Rhod Sharp.

Skills

Programming

Python

MATLAB

R

C++

JavaScript

HTML

Libraries

NumPy

Pandas

Librosa

scikit-learn

matplotlib

Essentia

Frameworks and Deep Learning

PyTorch

TensorFlow

Keras

Other Tools and Technologies

Git

Logic Pro X

Streamlit

PsychoPy

Honors & Awards

2024 – Awarded $650 to attend the IEEE International Symposium on the Internet of Sounds (IS2 2024).

2022 – Received a $5,000 grant for fMRI research.

2021 – Fellowship awarded for doctoral research excellence.

2018 – Recognized for research experience and academic performance.

2018 – Awarded $6,500 for summer research in neuroscience.

2017 – Received $2,000 for academic achievement and research potential.

2016 – Awarded $10,000 for resilience and determination in overcoming adversity.

2016 – Recognized for outstanding academic performance and service.

2015 – Awarded $40,000 for academic excellence in STEM.

2015 – Received $4,000 and inducted into the Alumni Scholars Club.

2015 – Awarded $2,500 for academic success and community service.

2015 – Awarded $1,000 for exemplary performance in high school.

Memberships & Organizations

Service & Reviewing

- Program Committee Member & Reviewer, NeurIPS 2025 Workshop AI for Music: Where Creativity Meets Computation.

- Program Committee Member & Reviewer, IEEE International Symposium on the Internet of Sounds (IS2 2025).